NRSDK Coordinate Systems

Unity-based Coordinate Systems

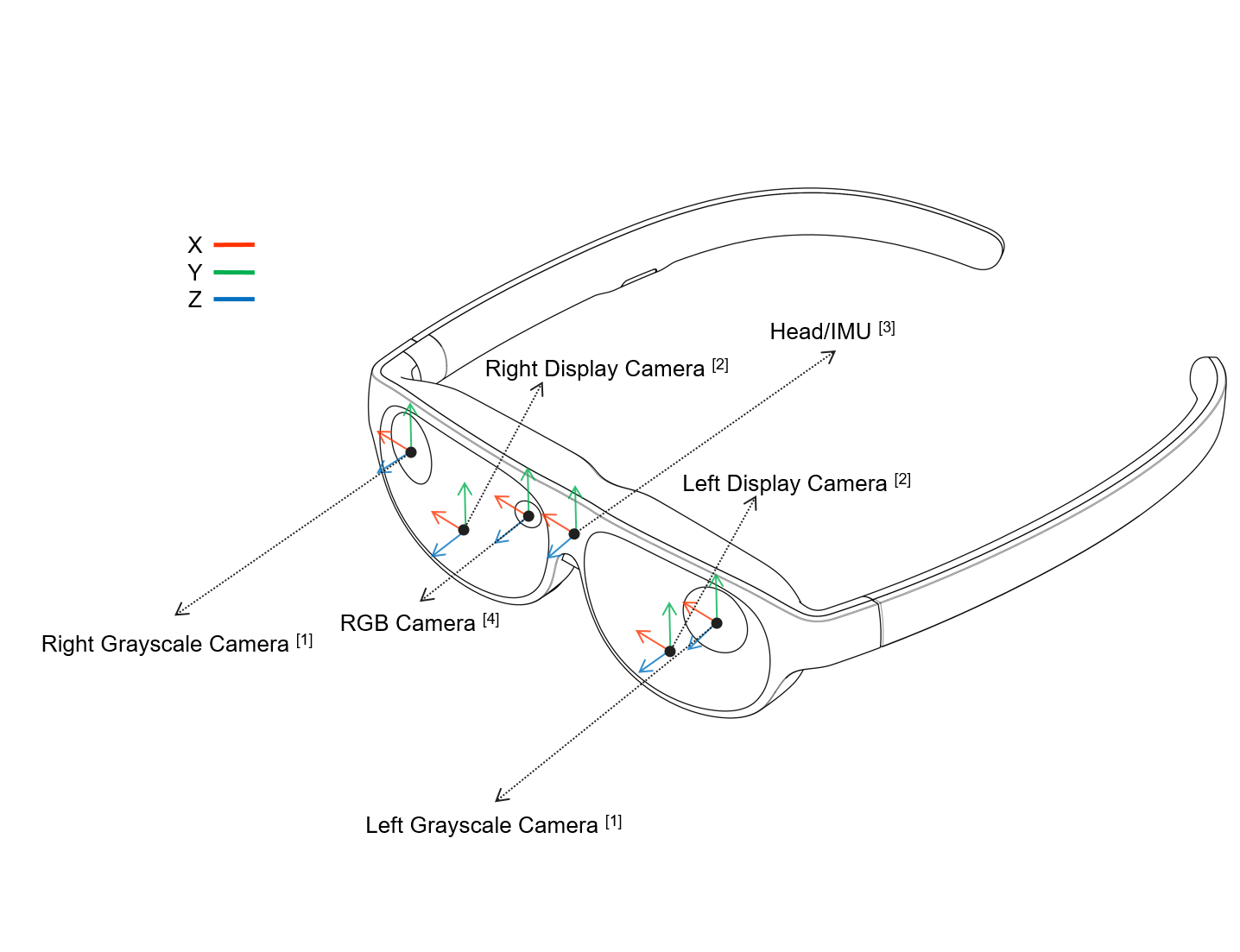

XREAL Glass Components and Their Unity-based Coordinate Systems

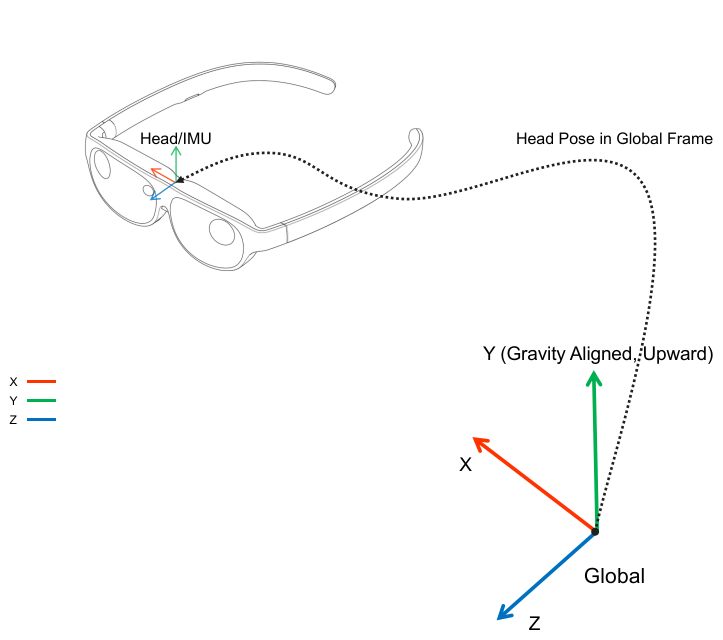

Interface for Head Pose

Interface for Extrinsics Between Components

Example 1: Getting the Extrinsics of RGB Camera From Head

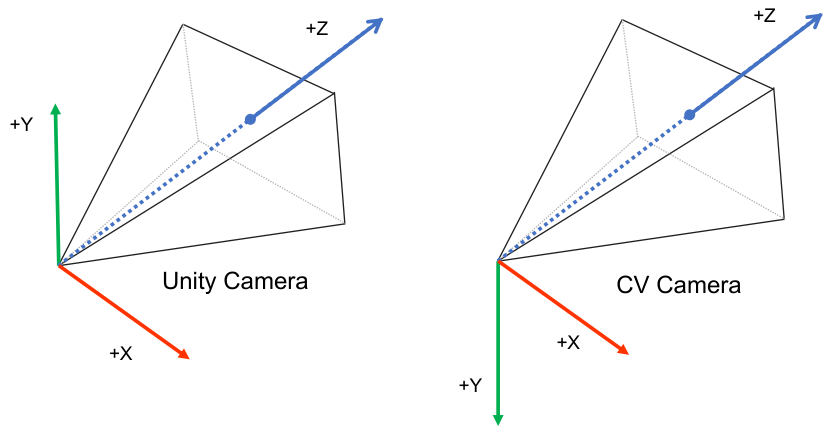

Converting to OpenCV-based Coordinate Systems

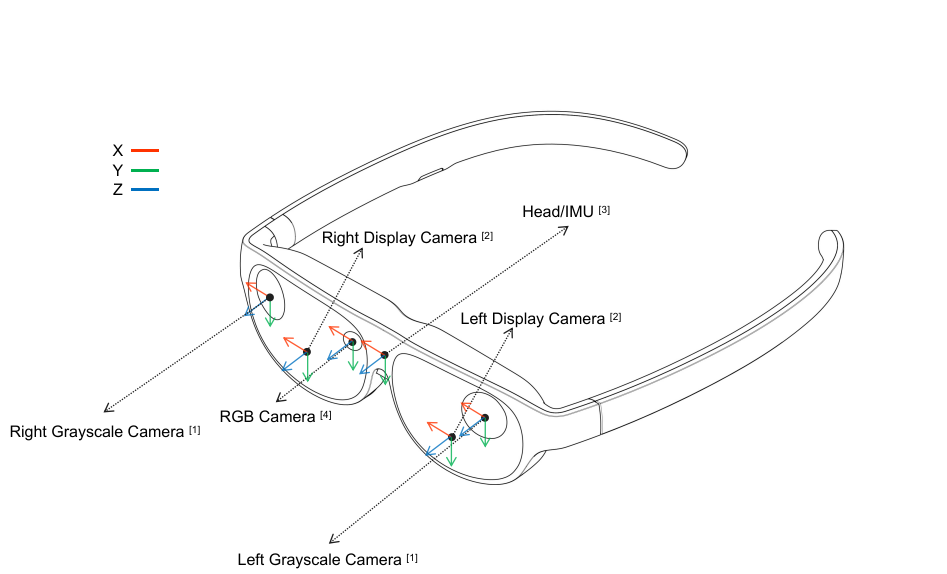

XREAL Glass Components and Their OpenCV-based Coordinate Systems

Converting Extrinsics: From Unity to OpenCV
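
As a rough illustration of this conversion, the sketch below assumes the usual axis conventions: Unity is left-handed with +X right, +Y up, +Z forward, and the OpenCV camera frame is right-handed with +X right, +Y down, +Z forward. Under that assumption, a 4x4 rigid transform can be converted by flipping the Y axis on both sides, `M_cv = F * M_unity * F` with `F = diag(1, -1, 1, 1)`. The names `FLIP_Y`, `matmul4`, and `unity_to_opencv` are hypothetical helpers for this sketch and are not part of NRSDK; the axis conventions should be checked against the figures on this page.

```python
# Assumption: the two conventions differ only in the direction of the Y axis
# (Unity: Y up, left-handed; OpenCV: Y down, right-handed).
FLIP_Y = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, -1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]


def matmul4(a, b):
    """Multiply two 4x4 matrices stored as row-major nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


def unity_to_opencv(m_unity):
    """Convert a 4x4 rigid transform from Unity axes to OpenCV axes.

    Flipping Y on both the input and output side keeps the result a
    proper rotation plus translation (determinant of the rotation is +1).
    """
    return matmul4(matmul4(FLIP_Y, m_unity), FLIP_Y)


# Usage: identity rotation with a translation of (0.1, 0.2, 0.3) in Unity axes.
m_unity = [
    [1.0, 0.0, 0.0, 0.1],
    [0.0, 1.0, 0.0, 0.2],
    [0.0, 0.0, 1.0, 0.3],
    [0.0, 0.0, 0.0, 1.0],
]
m_cv = unity_to_opencv(m_unity)
# Only the Y component of the translation changes sign.
```

Because `F` is its own inverse, applying `unity_to_opencv` twice returns the original matrix, so the same helper also converts in the opposite direction.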

Example 2: Converting the Extrinsics of RGB Camera From Head to OpenCV

Example 3: Getting the Extrinsics of Right Grayscale Camera From Left Grayscale Camera in OpenCV
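
The composition behind this kind of example can be sketched as follows. The sketch assumes each camera's extrinsics are given as a 4x4 rigid transform that maps points from the camera frame into the head frame, and that both matrices are already expressed in the same (OpenCV) axis convention. If the SDK uses the opposite direction, the inverse moves to the other factor. `rigid_inverse`, `matmul4`, and the sample offsets are hypothetical and are not NRSDK calls or real calibration values.

```python
def matmul4(a, b):
    """Multiply two 4x4 matrices stored as row-major nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


def rigid_inverse(m):
    """Invert a rigid transform [R | t] as [R^T | -R^T t]."""
    r_t = [[m[j][i] for j in range(3)] for i in range(3)]  # transpose of R
    t = [m[i][3] for i in range(3)]
    t_inv = [-sum(r_t[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [
        r_t[0] + [t_inv[0]],
        r_t[1] + [t_inv[1]],
        r_t[2] + [t_inv[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]


def relative_extrinsics(head_from_left, head_from_right):
    """Pose of the right camera expressed in the left camera's frame.

    left_from_right = inverse(head_from_left) * head_from_right
    """
    return matmul4(rigid_inverse(head_from_left), head_from_right)


# Usage with made-up offsets: cameras 5 cm to either side of the head origin.
head_from_left = [
    [1.0, 0.0, 0.0, -0.05],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
head_from_right = [
    [1.0, 0.0, 0.0, 0.05],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
left_from_right = relative_extrinsics(head_from_left, head_from_right)
# The translation column holds the stereo baseline along the left camera's X axis.
```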

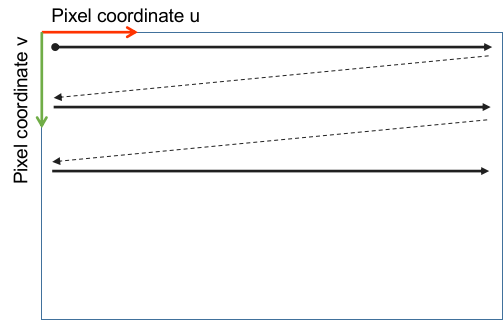

Image Pixel Coordinate System and Camera Intrinsics in OpenCV
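
For orientation, the standard pinhole model used by OpenCV maps a 3D point in the camera frame to pixel coordinates as `u = fx * x / z + cx` and `v = fy * y / z + cy`, with the pixel origin at the top-left corner of the image, `u` increasing to the right and `v` increasing downward. The sketch below applies that formula and ignores lens distortion. The function name `project_point` and the intrinsic values are illustrative assumptions, not values returned by NRSDK.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the OpenCV camera frame onto the image plane.

    point_cam: (x, y, z) with +X right, +Y down, +Z forward, z > 0.
    fx, fy:    focal lengths in pixels.
    cx, cy:    principal point in pixels.
    Lens distortion is not modeled here.
    """
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


# Usage with made-up intrinsics for a 640x480 image.
fx, fy, cx, cy = 500.0, 500.0, 320.0, 240.0
center = project_point((0.0, 0.0, 1.0), fx, fy, cx, cy)
offset = project_point((0.5, -0.25, 2.0), fx, fy, cx, cy)
# A point on the optical axis lands on the principal point (cx, cy);
# a point with negative y (above the axis) lands above it in the image.
```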

Interface for Camera Image Data

Example 4: Getting the RGB Camera's Image as Raw Byte Array

Interface for Camera Intrinsics and Distortion

Example 5: Getting the RGB Camera's Intrinsic Parameters