NRInput

To enable input support for XREAL devices (controller and hand tracking), drag the NRInput prefab into your scene hierarchy. NRInput is used to query virtual or raw controller state, such as buttons, triggers, and capacitive touch data.

Raycast Mode: Choose between Laser and Gaze interaction. Laser is the default raycasting mode and the one most apps are built on. In this mode, the ray starts from the center of the controller.

Input Source Type: Choose between Controller and Hands. Selecting Hands enables hand tracking.

You may leave other fields unchanged.

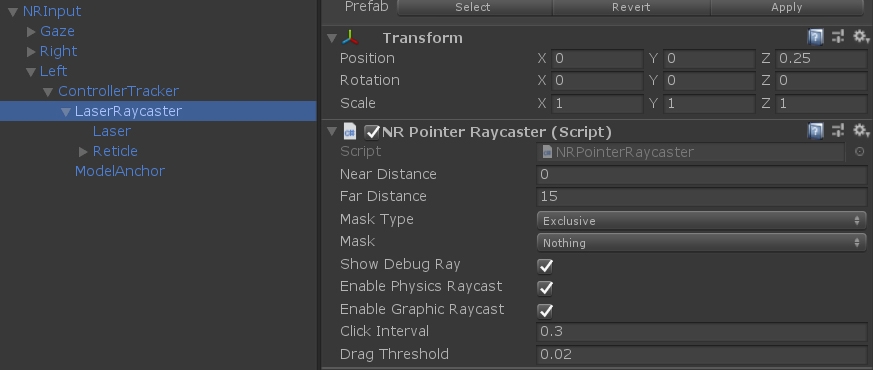

Raycasters in NRInput

The raycaster class inherits from Unity's BaseRaycaster class. The maximum raycasting distance of the chosen raycaster can be modified directly in the Inspector window. You can also define which objects are interactable by changing the raycaster's Mask parameter.

Handle Controller State Change

The primary usage of NRInput is to access controller button state through Get(), GetDown(), and GetUp().

Get() queries the current state of a controller button.

GetDown() queries whether a controller button was pressed this frame.

GetUp() queries whether a controller button was released this frame.

Sample Usages:
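A minimal sketch of polling the Trigger button every frame. It assumes the query methods are exposed as NRInput.GetButton(), NRInput.GetButtonDown(), and NRInput.GetButtonUp(), that the button is identified by ControllerButton.TRIGGER, and that the SDK namespace is NRKernal; verify these names against your NRSDK version. The class name TriggerPollingExample is hypothetical.

```csharp
using UnityEngine;
using NRKernal; // Assumed NRSDK namespace

// Hypothetical example component: attach to any GameObject in a scene
// that already contains the NRInput prefab.
public class TriggerPollingExample : MonoBehaviour
{
    void Update()
    {
        // Assumed API: true on every frame while the Trigger is held down.
        if (NRInput.GetButton(ControllerButton.TRIGGER))
        {
            Debug.Log("Trigger is held");
        }

        // Assumed API: true only on the frame the Trigger was pressed.
        if (NRInput.GetButtonDown(ControllerButton.TRIGGER))
        {
            Debug.Log("Trigger pressed this frame");
        }

        // Assumed API: true only on the frame the Trigger was released.
        if (NRInput.GetButtonUp(ControllerButton.TRIGGER))
        {
            Debug.Log("Trigger released this frame");
        }
    }
}
```

Polling belongs in Update() because the pressed and released states are only valid for a single frame.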

You could also add listeners to controller buttons:

Sample Usages:
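A minimal sketch of registering a click listener instead of polling. It assumes NRInput exposes AddClickListener() and RemoveClickListener() taking a ControllerHandEnum, a ControllerButton, and a System.Action, and that the SDK namespace is NRKernal; verify these signatures against your NRSDK version. The class and method names are hypothetical.

```csharp
using UnityEngine;
using NRKernal; // Assumed NRSDK namespace

// Hypothetical example component that reacts to Trigger clicks
// on the right-hand controller.
public class TriggerClickListenerExample : MonoBehaviour
{
    void OnEnable()
    {
        // Assumed API: subscribe to click events for the right Trigger.
        NRInput.AddClickListener(ControllerHandEnum.Right, ControllerButton.TRIGGER, OnTriggerClick);
    }

    void OnDisable()
    {
        // Assumed API: unsubscribe with the same delegate so the callback
        // is not invoked after this component is disabled or destroyed.
        NRInput.RemoveClickListener(ControllerHandEnum.Right, ControllerButton.TRIGGER, OnTriggerClick);
    }

    private void OnTriggerClick()
    {
        Debug.Log("Trigger clicked");
    }
}
```

Using a named method rather than a lambda keeps a reference that can be passed to the removal call later.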

Other Controller Events:

Change Controller Behaviour

Getting Controller Data

Sample Usages:

Get Frequently Used Anchors

NRInput provides a quick way to get the frequently used root nodes (anchors) for gaze and laser.

Sample Usage:
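A minimal sketch of fetching the gaze and laser anchors. It assumes the anchors are exposed through NRInput.AnchorsHelper.GetAnchor() and identified by ControllerAnchorEnum values named GazePoseTrackerAnchor and RightLaserAnchor; verify these names against your NRSDK version. The class name AnchorLookupExample is hypothetical.

```csharp
using UnityEngine;
using NRKernal; // Assumed NRSDK namespace

// Hypothetical example component that caches two commonly used anchors.
public class AnchorLookupExample : MonoBehaviour
{
    private Transform m_GazeAnchor;
    private Transform m_LaserAnchor;

    void Start()
    {
        // Assumed API: root node that follows the user's gaze.
        m_GazeAnchor = NRInput.AnchorsHelper.GetAnchor(ControllerAnchorEnum.GazePoseTrackerAnchor);

        // Assumed API: root node of the right controller's laser.
        m_LaserAnchor = NRInput.AnchorsHelper.GetAnchor(ControllerAnchorEnum.RightLaserAnchor);
    }

    void Update()
    {
        // Draw the laser direction in the Scene view for debugging.
        if (m_LaserAnchor != null)
        {
            Debug.DrawRay(m_LaserAnchor.position, m_LaserAnchor.forward, Color.red);
        }
    }
}
```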

Interact with GameObject

Please inspect CubeInteractiveTest.cs, which handles Unity events raised when the user interacts with the GameObject the script is attached to. Be aware that the GameObject must have a Collider component in order to receive the events. For a full list of supported Unity events, please refer to: https://docs.unity3d.com/Packages/com.unity.ugui@1.0/manual/SupportedEvents.html
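A minimal sketch of a script in the same spirit, built only on Unity's standard EventSystem interfaces (IPointerEnterHandler, IPointerExitHandler, IPointerClickHandler). It is not the contents of CubeInteractiveTest.cs; the class name PointerFeedbackExample and the color choices are hypothetical.

```csharp
using UnityEngine;
using UnityEngine.EventSystems;

// Hypothetical example: changes the object's color in response to
// pointer events. A Collider is required so raycasts can hit the object.
[RequireComponent(typeof(Collider))]
public class PointerFeedbackExample : MonoBehaviour,
    IPointerEnterHandler, IPointerExitHandler, IPointerClickHandler
{
    private Renderer m_Renderer;

    void Awake()
    {
        m_Renderer = GetComponent<Renderer>();
    }

    // Called when the ray starts pointing at this object.
    public void OnPointerEnter(PointerEventData eventData)
    {
        SetColor(Color.green);
    }

    // Called when the ray stops pointing at this object.
    public void OnPointerExit(PointerEventData eventData)
    {
        SetColor(Color.white);
    }

    // Called when the object is clicked while being pointed at.
    public void OnPointerClick(PointerEventData eventData)
    {
        SetColor(new Color(Random.value, Random.value, Random.value));
    }

    private void SetColor(Color color)
    {
        // Skip silently if the object has no Renderer to tint.
        if (m_Renderer != null)
        {
            m_Renderer.material.color = color;
        }
    }
}
```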

Interact with Unity UI

NRInput integrates with Unity's EventSystem, so users can interact with Unity UI. For Unity UI to respond to raycasts and receive Unity events, you must remove the default Graphic Raycaster component from the Canvas and attach a Canvas Raycast Target component instead.

You can then add event callbacks to Unity UI elements such as Button, Image, Toggle, and Slider. For example, the On Click() event on a Button:
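The same callback can also be registered from a script rather than in the Inspector. A minimal sketch using Unity's standard Button.onClick event; the class name ButtonClickExample and the field m_Button are hypothetical, and the Button reference is expected to be assigned in the Inspector.

```csharp
using UnityEngine;
using UnityEngine.UI;

// Hypothetical example: logs a message whenever the referenced
// Button is clicked.
public class ButtonClickExample : MonoBehaviour
{
    // Assign the target Button in the Inspector.
    [SerializeField] private Button m_Button;

    void OnEnable()
    {
        if (m_Button != null)
        {
            m_Button.onClick.AddListener(HandleClick);
        }
    }

    void OnDisable()
    {
        // Remove the listener so it is not called on a disabled component.
        if (m_Button != null)
        {
            m_Button.onClick.RemoveListener(HandleClick);
        }
    }

    private void HandleClick()
    {
        Debug.Log("Button clicked");
    }
}
```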
